Fixing html2text Quadratic Runtime

25/May 2018Background

I come across this command line utility available in linux called html2text which was first written in 1999 and changed hands later. Obviously an old project, but a solid one. At least it handles a<div><br></div>b properly by outputing a\n\nb, instead of a\n\n\nb like most of the other converters out there. (I’m looking at you, Python html2text.)

I download the source code of v1.3.2a from here and play around it. It is fast, but utf-8 support is lacking, which is a deal breaker for me.

Then I realize the debian version has a utf8 flag so I install it with sudo apt install html2text. Neat. The simple utf8 html input work now. But when I input a 0.05MB html, it takes 15 seconds to process, which is ridiculously slow. Something’s wrong.

Investigation

The straightforward way to kickstart the investigation is to profile. With the source code downloaded and debian patches applied (using Quilt, quite a hassle), I compile the project with debug flags to get ready for profiling using Valgrind/Callgrind. The command looks like this: valgrind --tool=callgrind ./html2text 005m.html

In the first run the functions are all addresses instead of function names, which is weird, since I compiled the project with debug flags already. Then I realize I called the system’s html2text instead of calling the compiled ./html2text. Rookie mistake.

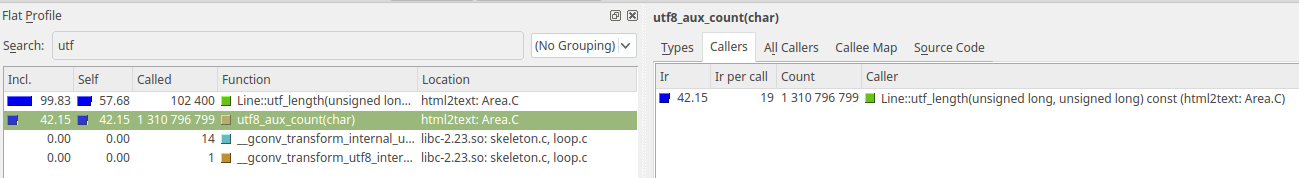

So here’s the screenshot of the successful profiling results shown in KCacheGrind:

Digging deeper

There’s a low-hanging fruit. These 2 functions utf_length and utf8_aux_count occupies most of the runtime. Notice that the call count of utf8_aux_count is crazy high but its Ir per call is as low as 19. Let’s look at the source code of utf8_aux_count.

/* utf_length() and utf_width()

*

* Very simplified algorithm of calculating length of UTF-8

* string. No check for errors. Counting only ASCII bytes and

* leading bytes of UTF-8 multibyte sequences. All bytes like

* 10xxxxxx are dropped. If USE_UTF8 is false then returns

* usual length. --YS

*/

size_t utf8_aux_count(char ch)

{

if((ch & 0xe0) == 0xc0)

{

return 1;

}

else if((ch & 0xf0) == 0xe0)

{

return 2;

}

else if ((ch & 0xf8) == 0xf0)

{

return 3;

}

else

{

return 0;

}

}

Nothing special at utf8_aux_count.

Let’s look at utf_length.

unsigned int

Line::utf_length(size_type f, size_type t) const

{

size_type m = (t < length_ ? t : length_);

size_type r = m - f;

if(USE_UTF8)

{

for (int i = f; i < m; i++)

{

char& ch = cells_[i].character;

size_type aux_count = utf8_aux_count(ch);

r -= aux_count;

i += aux_count;

}

}

return r;

}

utf_length looks cool too.

How about the caller of utf_length?

/*

* Make up "line" into an Area. Attempt to return an Area no wider than "w".

*/

static Area *

make_up(const Line &line, Area::size_type w, int halign)

{

if (line.empty()) return 0;

auto_ptr<Area> res(new Area);

Line::size_type from = 0;

while (from < line.length()) {

/*

* A sole newline character has a special meaning: Append a blank line.

*/

if (line[from].character == '\n') {

res->resize(res->width(), res->height() + 1);

from++;

continue;

}

Line::size_type to = from + 1;

int to_from;

Line::size_type lbp = (Line::size_type) -1; // "Last break position".

/*

* Determine the line break position.

*/

while (to < line.length()) {

if (line[to].character == '\n') {

break;

}

char c1 = line[to].character, c2 = line[to - 1].character;

if (c1 == ' ' || c1 == '('/*)*/ || c1 == '['/*]*/ || c1 == '{'/*}*/ || (

(

c2 == '-' ||

c2 == '/' ||

c2 == ':'

) &&

c1 != ',' &&

c1 != '.' &&

c1 != ';' &&

c1 != ':'

)) {

lbp = to++;

while (to < line.length() && line[to].character == ' ') to++;

} else {

to++;

}

if (line.utf_length(from,to) > w && lbp != (Area::size_type) -1)

{ to = lbp; break; }

}

to_from = line.utf_length(from,to);

/*

* Copy the "from...to" range from the "line" to the bottom of the "res"

* Area.

*/

Area::size_type x = 0;

Area::size_type len = to - from;

if (halign == Area::LEFT || to_from >= w) { ; } else

if (halign == Area::CENTER) { x += (w - to_from) / 2; } else

if (halign == Area::RIGHT) { x += w - to_from; }

res->insert(line.cells() + from, len, x, res->height());

/*

* Determine the beginnning of the next line.

*/

if (to == line.length()) break;

from = to;

if (line[from].character == '\n') {

++from;

} else

if (line[from].character == ' ') {

do {

++from;

} while (from < line.length() && line[from].character == ' ');

}

}

return res.release();

}

This line catches my eye.

if (line.utf_length(from,to) > w && lbp != (Area::size_type) -1)

{ to = lbp; break; }

It is called inside a while loop with from unchanged and to increasing. Calling line.utf_length(from,to) is potentially doing many redundant calculations since utf_length(a,b) == utf_length(a,c) + utf_length(c,b). There is no need to measure from from every time. The redundant calls lead to a quadratic runtime.

Solution

The solution is to cache the old result and use it in the next calculation of line’s utf_length.

The code looks like this:

Line::size_type last_to = from;

int last_utf_len = 0;

... // Omitted

while (to < line.length()) {

... // Omitted

int utf_len = last_utf_len + line.utf_length(last_to, to);

if (utf_len > w && lbp != (Area::size_type) -1)

{ to = lbp; break; }

last_to = to;

last_utf_len = utf_len;

}

The fix is simpler than it sounds.

Result

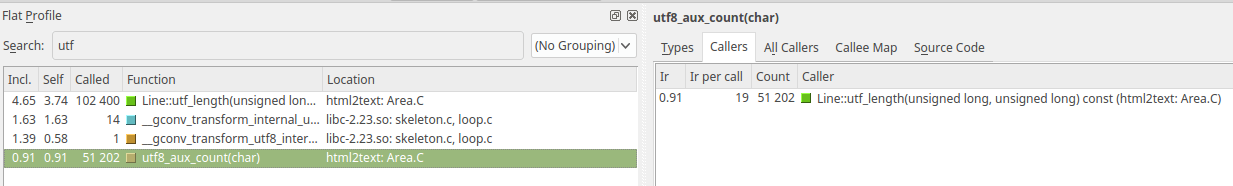

Now let’s profile the code with the same input again.

The call count of utf_length remains the same while the call count of utf8_aux_count decreases drastically. With the same 0.05MB input, the runtime goes from 15s to 0.02s.

Blaming

By blaming, I mean something like git blame. Actually, the patch for utf8 support written in 2009 is to blame. This bug had been undetected for years.

Submitting a patch

Now that I fix it, I feel like submitting a patch such that everyone can use a non-ridiculously-slow version of html2text since utf8 is default on.

I use Quilt to prepare the patch and it makes me appreciate git more. I use reportbug to report to the debian package bug report page. I have submitted the report and patch for a few days now, no replies yet. UPDATE 20180709: The patch has been merged!

Afterword

Since my patch is not accepted yet, I look around the bug report page and find another utf-8 support patch which was from 2014. The patch’s author attached the whole nmu.diff here. The patch was too large that the maintainer was reluctant to accept. The fact is, the patch rewrote the utf-8 support and the new code doesn’t have the quadratic runtime problem. Too bad it has not been merged.